In entrepreneurship education, a student’s ability to reflect on their stakeholders—and themselves—is critical. However, traditional methods for capturing this reflection are flawed.

Standard pre- and post-course questionnaires often result in “foggy” data. Students rush through them, providing generic, shallow answers that lack genuine introspection or empathy. “Lighthouse” was built to solve this depth problem by transforming a static form into a dynamic, AI-guided conversation.

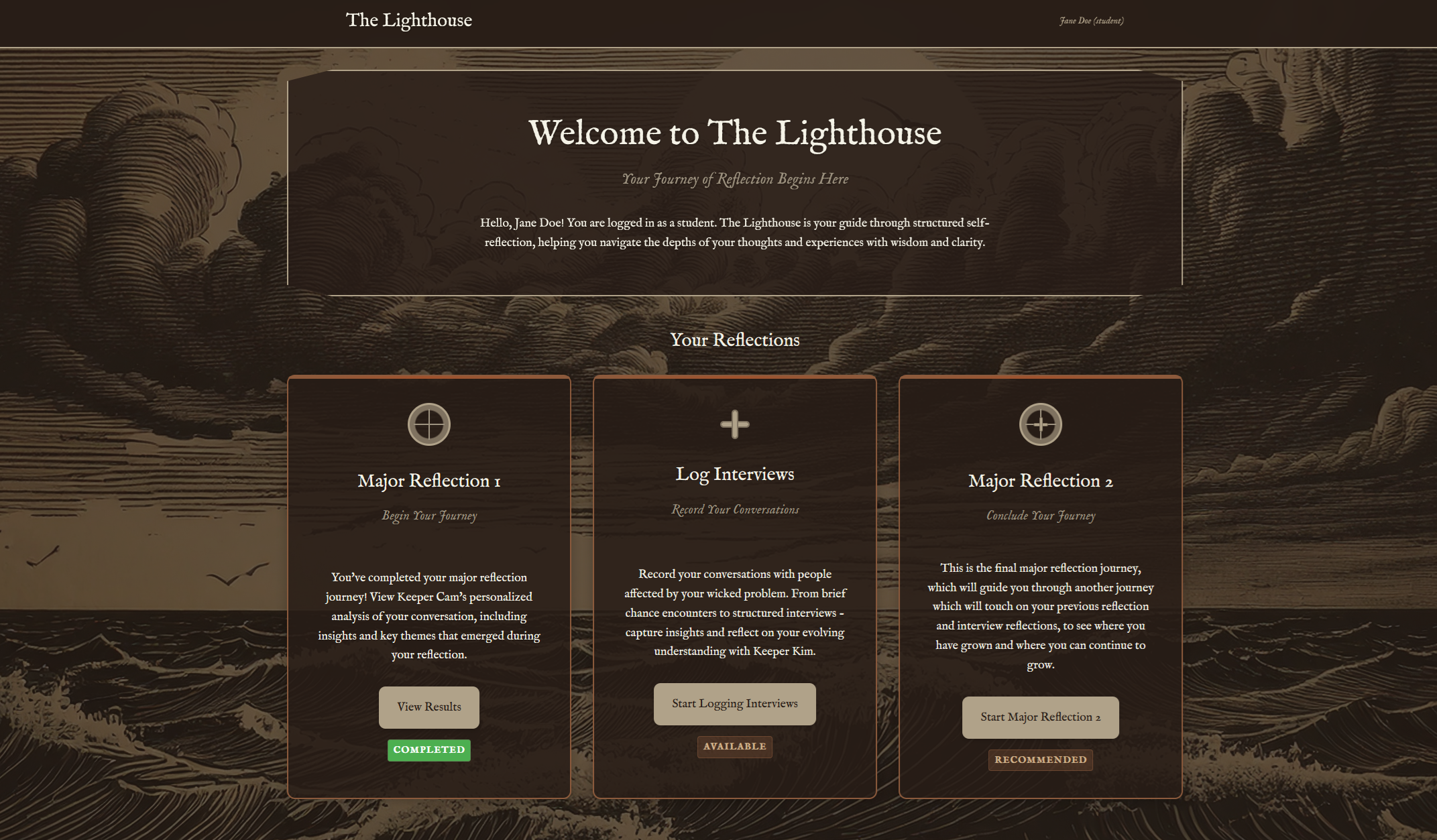

The Solution: “Keeper Cam”

Lighthouse replaces the standard form with an interactive persona named Keeper Cam. Instead of simply collecting answers, Cam acts as a conversational partner.

It guides students through the course’s preset reflection questions, but with a critical twist: it pushes back. If a student gives a brief or superficial answer, the AI detects this and generates follow-up questions that probe for more detail. This forces the student to articulate their thoughts more clearly, producing a dataset significantly richer than a standard survey yields.

Once the reflection is complete, a separate model condenses the conversation into a structured report for the student. The student gets something useful back immediately, which encourages further reflection and keeps the exercise from being pure data extraction.
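The summarizing step can be sketched as a prompt builder plus a parser for the model's reply. This is a minimal sketch under assumptions: the `Turn` and `ReflectionReport` types, the report fields, and the JSON reply format are all hypothetical, since the write-up does not describe the report's structure, and the actual Claude API call is left out.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Turn:
    speaker: str  # "cam" or "student" (hypothetical labels)
    text: str


@dataclass
class ReflectionReport:
    # Hypothetical report sections; the real report layout is not specified in the source
    key_insights: list[str] = field(default_factory=list)
    stakeholder_observations: list[str] = field(default_factory=list)
    questions_to_revisit: list[str] = field(default_factory=list)


def build_summary_prompt(transcript: list[Turn]) -> str:
    """Flatten the finished conversation into one prompt for the summarizing model."""
    lines = [f"{turn.speaker.upper()}: {turn.text}" for turn in transcript]
    return (
        "Summarize this reflection for the student. Reply with JSON only, using the keys "
        "key_insights, stakeholder_observations and questions_to_revisit (each a list of strings).\n\n"
        + "\n".join(lines)
    )


def parse_report(raw_json: str) -> ReflectionReport:
    """Turn the summarizing model's JSON reply into a typed report; missing keys become empty lists."""
    data = json.loads(raw_json)
    return ReflectionReport(
        key_insights=[str(x) for x in data.get("key_insights", [])],
        stakeholder_observations=[str(x) for x in data.get("stakeholder_observations", [])],
        questions_to_revisit=[str(x) for x in data.get("questions_to_revisit", [])],
    )
```

Asking for JSON and parsing it into fixed fields is one way to get a report that renders consistently for every student, whatever the conversation looked like.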

Engineering the “Dual-Agent” Architecture

To achieve a balance between structured data collection and natural conversation, I engineered a Dual-Agent Architecture using the Anthropic Claude API.

1. The Analyst (Quality Control)

The first agent runs silently in the background and never speaks to the student. Its sole job is to analyze the student’s latest input against the learning objectives and judge, using a rubric, whether the answer shows sufficient “depth” and “empathy”.
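One way to make the Analyst's judgement machine-readable is to have it reply with rubric scores as JSON and decide "shallow" in code rather than in the prompt. The sketch below assumes a hypothetical 1 to 5 scale, a pass mark of 3, and the field names shown; the source does not specify the rubric's format, and the Claude API call that produces the raw reply is omitted.

```python
import json
from dataclasses import dataclass

# Hypothetical rubric settings: 1-5 scores, anything below 3 is treated as shallow
SCORE_MIN, SCORE_MAX, PASS_MARK = 1, 5, 3

# Hypothetical system prompt for the Analyst; it never addresses the student
ANALYST_SYSTEM_PROMPT = (
    "You grade one student answer against the course rubric. Do not address the student. "
    'Reply with JSON only: {"depth": <1-5>, "empathy": <1-5>, "missing": "<what a stronger answer would add>"}'
)


@dataclass
class AnalystVerdict:
    depth: int
    empathy: int
    missing: str  # short note handed on to the Interviewer

    @property
    def is_shallow(self) -> bool:
        return self.depth < PASS_MARK or self.empathy < PASS_MARK


def _clamp(value: int) -> int:
    # Keep out-of-range scores inside the rubric scale
    return max(SCORE_MIN, min(SCORE_MAX, int(value)))


def parse_verdict(raw: str) -> AnalystVerdict:
    """Parse the Analyst's JSON reply into a verdict the orchestration code can act on."""
    data = json.loads(raw)
    return AnalystVerdict(
        depth=_clamp(data["depth"]),
        empathy=_clamp(data["empathy"]),
        missing=str(data.get("missing", "")),
    )
```

A side benefit of this split is that the pass mark can be adjusted without rewording the prompt.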

2. The Interviewer (Keeper Cam)

The second agent receives instructions from the Analyst. If the Analyst flags an answer as shallow, the Interviewer generates a targeted probing question (e.g., “You mentioned the stakeholder was frustrated. Can you elaborate on what specific body language gave that away?”). This separation of concerns keeps each model focused on a single task: the Analyst grades without having to stay in character, and the Interviewer converses without juggling the rubric, so the pedagogical goals are less likely to get lost mid-conversation.
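The hand-off between the two agents can be sketched as one orchestration function. This is a hedged illustration, not the project's code: `Verdict`, `next_message`, the instruction wording, and the `max_probes` cap are hypothetical, and each agent is passed in as a plain function so the flow can be shown without real Claude API calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    shallow: bool
    missing: str  # what the Analyst thinks the answer lacks


# Hypothetical signatures; in practice each would wrap its own Claude API call
AnalystFn = Callable[[str, str], Verdict]  # (question, answer) -> verdict
InterviewerFn = Callable[[str], str]  # private instruction -> message shown to the student


def next_message(
    question: str,
    answer: str,
    remaining_questions: list[str],
    probes_used: int,
    analyst: AnalystFn,
    interviewer: InterviewerFn,
    max_probes: int = 2,
) -> tuple[str, bool]:
    """Return (Keeper Cam's next message, whether the current question is finished)."""
    verdict = analyst(question, answer)
    if verdict.shallow and probes_used < max_probes:
        # The Interviewer sees only the Analyst's instruction, never the rubric itself
        instruction = (
            f"The student answered {answer!r} to {question!r}. "
            f"Ask one friendly follow-up question that draws out: {verdict.missing}"
        )
        return interviewer(instruction), False
    if remaining_questions:
        return interviewer(f"Briefly acknowledge the answer, then ask: {remaining_questions[0]}"), True
    return interviewer("Thank the student and close the reflection."), True
```

The `max_probes` cap is an added assumption: without some limit, a student who keeps giving short answers could be probed indefinitely.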

Infrastructure: Optimizing for Constraint

Deploying AI applications in a university setting requires navigating strict data sovereignty and resource constraints.

The “2GB” Challenge

We deployed to UQ Zones, a secure on-premises Linux environment, to keep student data on university servers. However, this came with a hard limit of 4GB of RAM, split between the development and production environments.

That left only 2GB of RAM for the production application. To make a modern Python/AI application viable within that budget, I replaced the development server with a production-grade stack:

- Nginx as a reverse proxy in front of the application, serving static assets directly so those requests never reach the Python workers.

- uWSGI as the application server, with its worker processes tuned to fit within the memory limit.
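Sizing the worker pool comes down to simple arithmetic once per-worker memory has been measured. The helper below is hypothetical and not part of the described stack; the 512MB reserve (for Nginx, the OS and anything else on the box) and the 150MB-per-worker figure are placeholders to be replaced with measured resident set sizes.

```python
def max_workers(total_mb: int, reserved_mb: int, per_worker_mb: int) -> int:
    """Number of uWSGI worker processes that fit in the memory left after fixed overhead."""
    if per_worker_mb <= 0:
        raise ValueError("per_worker_mb must be positive")
    available_mb = total_mb - reserved_mb
    # Round down: a worker that only partly fits would push the box into swap or the OOM killer
    return max(0, available_mb // per_worker_mb)


# Hypothetical figures: 2GB budget, 512MB reserved, 150MB per worker
print(max_workers(2048, 512, 150))  # prints 10
```

The result is the kind of number one would pass to uWSGI's `processes` option; uWSGI's `reload-on-rss` option (a per-worker RSS ceiling in megabytes) can then recycle any worker that grows past its share.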

Scalability & Load Testing

To validate that this constrained architecture could handle a live classroom, I built a custom load-testing framework using Locust. I simulated 100+ concurrent users engaging in multi-turn AI conversations. The tests confirmed that while the 2GB limit induced minor latency at peak load, the system remained stable and successfully preserved conversation state across all sessions.
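Confirming that conversation state was preserved requires a check after the run. A hedged sketch, assuming the load-test clients log every student message they send with a session ID and turn number, and that each session's stored student messages can be read back in order; both data shapes and the function name are hypothetical.

```python
from collections import defaultdict


def find_inconsistent_sessions(
    sent: list[tuple[str, int, str]],
    stored: dict[str, list[str]],
) -> list[str]:
    """Return IDs of sessions whose stored history does not match what the simulated student sent.

    sent:   (session_id, turn_index, message) records logged by the load-test clients
    stored: session_id -> student messages read back from the database, in saved order
    """
    expected: dict[str, list[str]] = defaultdict(list)
    for session_id, _turn_index, message in sorted(sent):
        expected[session_id].append(message)

    # Lost, reordered or cross-contaminated turns all show up as a mismatch
    bad = {sid for sid, messages in expected.items() if stored.get(sid) != messages}
    # A stored session that no simulated client created also points to a state bug
    bad.update(sid for sid in stored if sid not in expected)
    return sorted(bad)
```

Under this check, an empty result after a run supports a claim that state survived; any returned IDs point to sessions worth inspecting.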

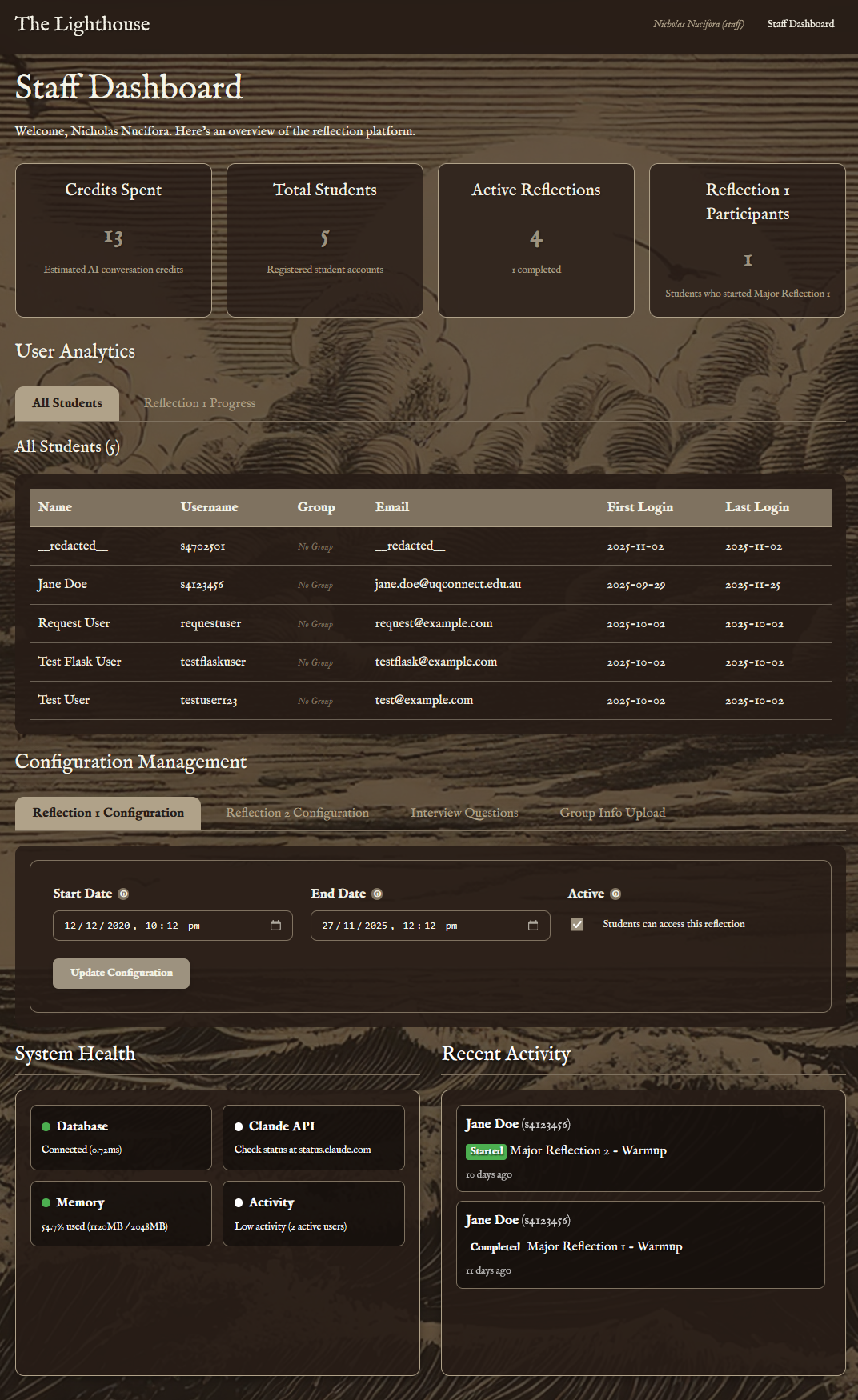

The Teacher Experience

While the AI works directly with students, the platform also provides a comprehensive management suite for teaching staff. I developed a dashboard that allows staff to:

- Monitor Reflections: View student conversations and AI-generated summaries in real time.

- Manage Groups: Digitally organize students into stakeholder interview groups (previously a manual spreadsheet task).

- Configure the AI: Modify the core interview questions and deadlines without touching the codebase.
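Making questions and deadlines editable without code changes usually means keeping them in the database rather than in source. The sketch below uses Python's built-in `sqlite3` so it runs standalone; the table and column names are hypothetical, and the actual application uses SQLAlchemy on MySQL.

```python
import sqlite3
from datetime import date

# Hypothetical schema; the real app uses SQLAlchemy models on MySQL
SCHEMA = """
CREATE TABLE IF NOT EXISTS reflection_question (
    id       INTEGER PRIMARY KEY,
    position INTEGER NOT NULL,  -- order in which Keeper Cam asks it
    prompt   TEXT    NOT NULL,
    due_date TEXT    NOT NULL   -- ISO 8601 date, so string comparison matches date order
)
"""


def active_questions(conn: sqlite3.Connection, today: date) -> list[str]:
    """Questions still open on `today`, in the order staff arranged them."""
    rows = conn.execute(
        "SELECT prompt FROM reflection_question WHERE due_date >= ? ORDER BY position",
        (today.isoformat(),),
    ).fetchall()
    return [prompt for (prompt,) in rows]


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
# Staff edits from the dashboard become plain row inserts and updates, with no code change
conn.executemany(
    "INSERT INTO reflection_question (position, prompt, due_date) VALUES (?, ?, ?)",
    [(1, "Who is your key stakeholder?", "2026-03-20"), (2, "What surprised you?", "2026-02-01")],
)
```

Because the interview loop reads its questions through a query like this each time, a dashboard edit takes effect on the next conversation turn.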

Ethics & Privacy

Balancing innovation with privacy is our primary challenge. Although the system currently relies on external APIs for its intelligence layer, we strip all direct identifiers (student IDs, names) from the prompt payload. Conversation history is stored locally on UQ servers and is not retained by the model providers.
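Identifiers can also turn up inside free text, for example when a student types their own name. A minimal redaction pass is sketched below, assuming student IDs are 8-digit numbers and that known names come from the course roster; both assumptions, and the `redact` helper itself, are hypothetical.

```python
import re

# Assumption: student IDs are 8-digit numbers; adjust to the real ID format
STUDENT_ID_PATTERN = re.compile(r"\b\d{8}\b")


def redact(text: str, known_names: list[str]) -> str:
    """Replace student IDs and known names with placeholders before text leaves the server."""
    text = STUDENT_ID_PATTERN.sub("[STUDENT_ID]", text)
    # Longest names first, so "Sam Lee" is replaced whole rather than leaving "[NAME] Lee"
    for name in sorted(known_names, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


print(redact("I'm Sam Lee (45123456). sam from my group agreed.", ["Sam", "Sam Lee"]))
# prints: I'm [NAME] ([STUDENT_ID]). [NAME] from my group agreed.
```

A pattern-based pass only catches identifiers it knows about, so it complements, rather than replaces, keeping IDs out of the structured payload entirely.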

We also implemented safety guardrails: the AI monitors for sensitive topics (e.g., mental health distress) and is programmed to de-escalate and redirect students to university support services rather than attempting to counsel them.
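The write-up describes the AI itself monitoring for sensitive topics. A complementary, deterministic backstop is a keyword screen that runs before either agent and returns a fixed redirection message, so that response never depends on model output. This is a swapped-in, simpler technique rather than the project's method; the phrase list, message and function name are hypothetical.

```python
import re
from typing import Optional

# Hypothetical, deliberately short phrase list for illustration only
DISTRESS_PATTERNS = [
    re.compile(r"\bhopeless\b"),
    re.compile(r"\bcan'?t cope\b"),
    re.compile(r"\bself[- ]harm\b"),
    re.compile(r"\bpanic attacks?\b"),
]

SUPPORT_MESSAGE = (
    "Thanks for telling me. That sounds really hard, and it is beyond what I can help with here. "
    "Please reach out to the university's student support services; they are there for exactly this."
)


def screen_for_distress(message: str) -> Optional[str]:
    """Return a fixed redirection message if the student's text matches a distress pattern, else None."""
    lowered = message.lower()
    if any(pattern.search(lowered) for pattern in DISTRESS_PATTERNS):
        return SUPPORT_MESSAGE
    return None
```

Keyword lists produce both false positives and misses, which is one reason model-based monitoring is still needed alongside a screen like this.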

Future Roadmap

The project is currently in the MVP testing phase, targeting a full rollout for Semester 1, 2026. Future development includes a “Final Reflection” module that uses the semester’s cumulative data to show students how their empathy metrics have evolved over time.

Tech Stack

- Backend: Flask (Python), SQLAlchemy, MySQL.

- Infrastructure: Nginx, uWSGI, UQ Zones (Linux).

- AI: Anthropic Claude API (Dual-Agent System).

- Testing: Locust (Load Testing).

- Frontend: JavaScript.